Ecommerce SEO Audit: Step-by-Step Checklist

Many e-commerce websites rely on organic traffic from search engines like Google, Bing, and Yahoo to win new customers, which is why the digital marketing strategy for an e-commerce store should dedicate a good share of its resources to formulating and executing an SEO strategy.

An SEO audit is essential for an effective SEO strategy because an assessment of technical SEO, on-page SEO, off-page SEO, competition, and traffic of the website can reveal mistakes that prevent the website from ranking well, and opportunities that can help gain more visibility – this assessment will form the basis of the SEO strategy.

In this blog post, we explain what an e-commerce SEO audit is, why it’s important to audit your e-commerce site regularly, and how to carry out an SEO audit on your e-commerce site.

- What is E-commerce SEO?

- What is an e-commerce SEO audit?

- Why is it important to regularly audit your website?

- How to implement an e-commerce SEO audit?

- Step 1: Technical SEO

- Step 1.1: Crawling and Indexing

- Step 1.2: Robots.txt

- Step 1.3: Response Codes

- Step 1.4: Redirect Chains

- Step 1.5: International SEO

- Step 1.6: XML Sitemaps

- Step 1.7: No Index and No follow meta tags

- Step 1.8: Faceted Navigation

- Step 1.9: Canonical Tags

- Step 1.10: Structured Data

- Step 1.11: Search Engine Friendly URLs

- Step 1.12: Site Architecture & Internal Linking

- Step 1.13: Page Speed

- Step 2: On-Page SEO

- Step 3: Off-Page SEO

- Step 4: Competitor Analysis

- Step 5: Traffic Analysis

- E-commerce SEO audit checklist

What is E-commerce SEO?

E-commerce SEO is the process of optimising an online store so that it ranks well in the search engine results page (SERP) for the keywords potential customers are searching for. It involves improving the website’s navigability and user experience, as well as the experience search engines have when crawling the website, to increase its rankings and visibility.

This process can help increase the number of customers, the number of purchases made, and therefore the total revenue generated by your e-commerce store.

However, an e-commerce SEO strategy is only effective when it is built on an e-commerce SEO audit.

What is an e-commerce SEO audit?

An e-commerce SEO audit is an in-depth analysis of an e-commerce website that identifies the main roadblocks to the growth of its organic traffic and conversions, and then proposes actionable solutions to fix these issues.

Below is the data typically collected to form an e-commerce SEO audit.

- Website navigation data

- Website hierarchy data

- Website URL structure data

- Website content data

- Website user experience data (including load time)

- Website traffic data

- Website competitors’ data

- Google Search Console data

- Google Analytics data

- SEMrush data

- Screaming Frog data

- SE Ranking data

- Ahrefs data

The SEO audit then forms the basis of the SEO strategy, in which the most important fixes are prioritised over the following months.

Why is it important to regularly audit your website?

Performing an SEO audit regularly (for example every four months, or at least twice a year) is the best way to check whether your current SEO strategy is improving the website’s visibility in the SERP, its conversions, and its total revenue.

If it is not, the SEO audit will provide useful information on how to adjust the SEO strategy for better results.

Keep reading to find out what an e-commerce SEO audit is made up of and how to carry one out on your e-commerce store to improve your brand’s visibility in the SERP.

How to implement an e-commerce SEO audit?

We know that the study and analysis behind an e-commerce SEO audit can be very challenging, especially because it involves many tasks from different areas. To help you through the process, we have listed all the main aspects to consider in a simple e-commerce SEO audit checklist.

Below are the main analyses that make up an e-commerce SEO audit.

- Technical SEO

- On-page SEO

- Off-page SEO

- Website competitors

- Website traffic

Step 1: Technical SEO

Technical SEO is a crucial part of any e-commerce website’s SEO strategy. It involves optimising elements of your site such as page speed, structure, and schema markup to improve user experience and ensure that the website’s pages can be easily found and indexed by search engines. This can help boost organic traffic from search engines, which could in turn increase the number of conversions.

Technical SEO is also important for keeping up with Google’s ever-evolving algorithms, as well as ensuring that your site remains secure and compliant with their guidelines.

By investing in technical SEO, you can make sure that your e-commerce website runs smoothly and efficiently, giving customers an optimal experience while boosting your visibility in the search engine results.

Step 1.1: Crawling and Indexing

In SEO, crawling is the discovery process in which search engine robots (also known as web crawlers or spiders) systematically find the web pages on a website. Indexing is the step that follows: search engines analyse the crawled pages and store a copy of their information in their index, from which search results are served.

The crawling and indexing of your web pages should be made easy for search engine robots so that the pages can be shown in the SERP for relevant search queries. This is why checking the crawlability and indexing status of your website is an integral part of an SEO audit.

Screaming Frog can be used to find crawling issues on your e-commerce site. Google Search Console provides insight into your site’s crawlability and indexing; in particular, the “Page Indexing report” shows which pages on your site are indexed and which are not, along with the reasons.

Note that some pages on your e-commerce store should not be indexed, as they do not help your rankings in the SERP.

Examples include login, account, cart, checkout, internal search results, and filter pages. Because these pages do not need to be indexed, crawling them uses up crawl budget unnecessarily. Filter pages and internal search results pages can even create duplicate and low-quality pages that should not appear in the SERP.

As a result, make sure you identify these pages when reviewing the Page Indexing report in Google Search Console.
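If you export a list of indexed URLs (for example from the Page Indexing report or from a Screaming Frog crawl), a short script can flag the ones that look like login, account, cart, checkout, internal search, or filter pages. The Python sketch below is illustrative only: the path prefixes and filter parameter names are hypothetical and should be replaced with the ones your store actually uses.

```python
from urllib.parse import parse_qs, urlparse

# Hypothetical path prefixes for pages that usually should not be indexed.
NON_INDEXABLE_PREFIXES = ("/login", "/account", "/cart", "/checkout", "/search")
# Hypothetical query parameters created by filters and internal search.
FILTER_PARAMS = {"q", "colour", "size", "price", "sort"}


def should_not_be_indexed(url: str) -> bool:
    """Return True if the URL looks like a page that should stay out of the index."""
    parsed = urlparse(url)
    path = parsed.path.lower()
    # Match "/cart" and "/cart/...", but not unrelated paths like "/cartier-rings".
    if any(path == p or path.startswith(p + "/") for p in NON_INDEXABLE_PREFIXES):
        return True
    # Filter and internal search pages are usually identified by their query parameters.
    return bool(set(parse_qs(parsed.query)) & FILTER_PARAMS)


indexed_urls = [
    "https://example.com/rings/diamond-solitaire",
    "https://example.com/cart",
    "https://example.com/rings?colour=gold&sort=price",
    "https://example.com/account/orders",
]
for url in indexed_urls:
    if should_not_be_indexed(url):
        print("Review:", url)
```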

Now that you have found the pages that should not be indexed, how should you go about removing them?

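There are two main ways to deal with them:

- Block search engines from crawling these pages using the robots.txt file.

- Add a noindex meta tag to the pages.

Avoid using both methods on the same URL: if a page is blocked in robots.txt, search engines cannot crawl it to see its noindex tag, and a blocked URL can still be indexed (without its content) if other pages link to it.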

Step 1.2: Robots.txt

A technical SEO audit includes an analysis of the robots.txt file, which tells search engine robots which pages they may or may not crawl. Note that robots.txt controls crawling rather than indexing; to keep a page out of the index, use a noindex tag instead.

By typing “https://domain.com/robots.txt” into your browser’s address bar, you can view your website’s robots.txt file and decide whether you need to add rules that allow or disallow search engine robots from crawling certain pages.
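Before publishing new rules, you can test them offline. The sketch below uses Python’s standard urllib.robotparser module with example rules (the paths are hypothetical). This parser uses simple prefix matching and does not reproduce Google’s handling of * and $ wildcards, so treat its answers as a first check rather than a guarantee of how Googlebot behaves.

```python
from urllib.robotparser import RobotFileParser

# Example rules for illustration only; paste in your own robots.txt content.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /checkout/
Disallow: /account/
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in [
    "https://example.com/rings/diamond-solitaire",
    "https://example.com/checkout/payment",
    "https://example.com/search?q=ring",
]:
    # can_fetch() applies the rules for the given user agent to the URL's path.
    allowed = parser.can_fetch("Googlebot", url)
    print("ALLOWED" if allowed else "BLOCKED", url)
```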

Step 1.3: Response Codes

An HTTP status code is a three-digit code that the server returns when a client (a browser or a search engine crawler) requests a URL, indicating whether the request could be fulfilled.

The most common HTTP status codes are listed below.

- 200 OK status code: the request was successful

- 301 status code: requested resource was permanently moved to another location

- 302 status code: requested resource was temporarily moved to another location

- 404 status code: requested resource could not be found

- 410 status code: the requested URL was permanently removed

- 503 status code: server is temporarily unavailable, and will be available later

Crawling your website with Screaming Frog to check the response codes your web pages return is an important part of a technical e-commerce SEO audit.

The pages you want users and search engine crawlers to reach should return a 200 response code.

A website with many 4XX errors or 5XX server errors can see its rankings suffer, because users who cannot find the pages they are looking for are likely to leave in frustration, which harms the user experience and the perceived quality of your e-commerce site.

Additionally, internal links that point to error pages waste crawl budget and pass ranking power, or “link juice”, to broken pages, which further affects the website’s quality and its ability to get pages indexed and ranked at the top of the SERP.

Therefore, it is important to identify these issues and take appropriate action to fix them.
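Once you have exported each URL and its status code from a crawl (for example from Screaming Frog), you can group them by the action they need. In this Python sketch, the input format and the suggested actions in the comments are assumptions, not rules.

```python
from collections import defaultdict


def triage_status_codes(crawl: dict[str, int]) -> dict[str, list[str]]:
    """Group crawled URLs by status code class so each group can be handled together."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, status in crawl.items():
        if 200 <= status < 300:
            groups["ok"].append(url)  # nothing to do
        elif status in (301, 308):
            groups["permanent_redirect"].append(url)  # update internal links to the final URL
        elif status in (302, 307):
            groups["temporary_redirect"].append(url)  # confirm the move really is temporary
        elif status in (404, 410):
            groups["not_found"].append(url)  # redirect to a relevant page or remove the links
        elif 400 <= status < 500:
            groups["client_error"].append(url)  # e.g. 403: investigate access rules
        elif status >= 500:
            groups["server_error"].append(url)  # fix urgently with your developers or host
        else:
            groups["other"].append(url)
    return dict(groups)


crawl = {"/rings": 200, "/old-rings": 301, "/sale": 302, "/discontinued": 404, "/api": 503}
for group, urls in triage_status_codes(crawl).items():
    print(group, urls)
```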

Step 1.4: Redirect Chains

A redirect chain occurs when a source URL passes through multiple redirects before it reaches the target URL, and it can hinder your technical SEO efforts.

An example would be www.mysite.com/responsive redirecting to www.mysite.com/responsive-web-design, which then redirects to www.mysite.com/rwd.

Redirect chains across your website can cause lost link equity, increased page load time, and delayed crawling, which is why they should be fixed.

To fix a redirect chain, you must remove unnecessary redirects in the chain and make sure that there is only one 301 redirect that redirects the initial URL to the target URL.
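If you can export your redirect rules as source and target pairs (from your e-commerce platform or server configuration), a few lines of Python can collapse every chain into a single hop. This is a sketch with a hypothetical helper name; the example reuses the chain described above.

```python
def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Point every source URL straight at its final destination.

    Raises ValueError if the redirects form a loop.
    """
    flattened = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        # Follow the chain until we reach a URL that does not redirect any further.
        while target in redirects:
            if target in seen:
                raise ValueError(f"Redirect loop detected starting at {source}")
            seen.add(target)
            target = redirects[target]
        flattened[source] = target
    return flattened


redirects = {
    "/responsive": "/responsive-web-design",
    "/responsive-web-design": "/rwd",
}
print(flatten_redirects(redirects))
# {'/responsive': '/rwd', '/responsive-web-design': '/rwd'}
```

Each source in the result can then be given a single 301 redirect to its final target.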

Step 1.5: International SEO

International SEO is the process of optimising a website to increase the visibility and rankings in different countries and languages.

As a result, e-commerce websites can expand their reach to potential customers across the world, which can help increase brand exposure and sales in different markets.

Incorporating language or country codes into the URL structure and using the hreflang attribute are two ways to implement international SEO on your e-commerce website.

URL Architecture

Different URL structures can be used to target content to users in specific markets and languages. The most common URL structures are listed below.

- Country code top-level domains (ccTLDs): example.us

- Subdomains: us.example.com

- Sub-directories: example.com/us

- URL parameters: example.com/?lang=en-us

- Separate domains: exampleussite.com

Many experts use sub-directories because pages within a sub-directory benefit from the authority the root domain has already built, which helps increase the visibility and rankings of the website in the targeted market and language.

Nevertheless, search engines interpret each of these URL structures differently, so being aware of the pros and cons of each one is vital to ensure that your choice aligns with your future SEO strategy.

Hreflang Attribute

Hreflang tags are another way to implement international SEO.

Hreflang annotations tell search engines that a page has other versions at different URLs, translated into another language or targeted at another region. Search engines can then show each user the version that best matches their language and location.

Hreflang is good for SEO because it helps search engines treat these versions as alternates rather than competing duplicate content, and it improves the user experience.
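The Python sketch below generates the hreflang link tags for one page, assuming the sub-directory structure described above (the URLs are hypothetical). Each hreflang value is an ISO 639-1 language code, optionally followed by an ISO 3166-1 alpha-2 region code, and x-default marks the fallback version. The same set of tags, including a reference to the page itself, should appear on every version, because hreflang annotations must be reciprocal.

```python
from html import escape
from typing import Optional


def hreflang_tags(alternates: dict[str, str], x_default: Optional[str] = None) -> str:
    """Return <link rel="alternate" hreflang="..."> tags for every version of a page."""
    tags = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in sorted(alternates.items())
    ]
    if x_default:
        # Fallback for users whose language/region matches none of the versions.
        tags.append(f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}" />')
    return "\n".join(tags)


alternates = {
    "en-gb": "https://example.com/uk/rings/",
    "en-us": "https://example.com/us/rings/",
    "fr-fr": "https://example.com/fr/rings/",
}
print(hreflang_tags(alternates, x_default="https://example.com/rings/"))
```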

Step 1.6: XML Sitemaps

An XML sitemap is a file that lists the pages, videos, and other files on your website that you want search engines to find, and describes how they relate to each other. It helps search engine crawlers understand the website structure and discover its content quickly.

Search engine robots read this file to crawl your website more efficiently, which can help your content get crawled and indexed faster.

As part of a technical e-commerce SEO audit, check whether your e-commerce site has a robust sitemap, typically found in the root directory of your website, e.g. /sitemap.xml.

If you do not have a valid sitemap, create one and submit it in Google Search Console as part of your SEO strategy.

Below are 4 standard practices to follow with your sitemap.

- Only include pages that return a 200 OK response: search engines may trust a sitemap less when more than a small percentage of its URLs return another status

- Break your sitemap into multiple sitemaps (for example by category) if you have a large site with 10,000+ pages; this keeps each file well within the size limits

- Continually update your XML sitemap(s) so they remain clean

- If you have multiple XML sitemaps, create a sitemap index file that links to the individual XML sitemap files

Below are 6 common mistakes to avoid when creating or updating your sitemap.

- Creating a sitemap file larger than 50MB uncompressed

- Including more than 50,000 URLs in one XML sitemap file

- Including internal search result pages

- Including redirecting or error pages (3XX, 4XX, 5XX responses)

- Including duplicate pages

- Including pages that are blocked in robots.txt
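As an illustration, the Python sketch below uses the standard library’s xml.etree.ElementTree to split a list of URLs into sitemap files and build a sitemap index. It assumes you already have a clean list of canonical, indexable URLs that return 200 OK; the file names and base URL are hypothetical. The 50,000-URL and 50MB limits come from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000               # per-file URL limit in the sitemaps.org protocol
MAX_BYTES = 50 * 1024 * 1024    # per-file size limit (uncompressed)
XML_DECLARATION = '<?xml version="1.0" encoding="UTF-8"?>\n'


def _serialise(root: ET.Element) -> str:
    xml = XML_DECLARATION + ET.tostring(root, encoding="unicode")
    if len(xml.encode("utf-8")) > MAX_BYTES:
        raise ValueError("sitemap file exceeds 50MB; use fewer URLs per file")
    return xml


def build_sitemaps(urls: list[str], base_url: str, max_urls: int = MAX_URLS) -> dict[str, str]:
    """Return {file name: XML} for the sitemap files plus a sitemap index."""
    files = {}
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for start in range(0, len(urls), max_urls):
        name = f"sitemap-{start // max_urls + 1}.xml"
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for url in urls[start:start + max_urls]:
            # ElementTree escapes characters such as & automatically.
            ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
        files[name] = _serialise(urlset)
        # List each sitemap file in the index.
        ET.SubElement(ET.SubElement(index, "sitemap"), "loc").text = f"{base_url}/{name}"
    files["sitemap-index.xml"] = _serialise(index)
    return files
```

For example, 120,000 product URLs would produce sitemap-1.xml to sitemap-3.xml plus sitemap-index.xml.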

Step 1.7: No Index and No follow meta tags

A meta tag is a snippet of HTML code placed on a page that gives search engines information or instructions about it. The robots meta tag can tell Google not to index a page (noindex) or not to follow the links on it (nofollow); nofollow can also be applied to individual links with the rel="nofollow" attribute, so that they do not pass link equity or ranking power to the linked page.

“noindex” tags can keep unimportant pages, such as filter pages, out of the index. Search engines still need to crawl a page to see the tag, but they tend to crawl noindexed pages less often over time, which can free up crawl budget for more important pages.

However, “noindex” tags can keep important pages out of the index if they are set up incorrectly, while “nofollow” attributes placed on external links to authoritative sites can be a missed opportunity to show E-A-T (expertise, authoritativeness, and trust).

Therefore, as part of a technical SEO audit, check that “noindex” tags are present only on the pages that you do not want ranking in the SERP, and that you “nofollow” the correct links.
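To spot-check a page, you can parse its HTML for robots meta directives and nofollow links. This Python sketch uses the standard html.parser module; the sample HTML is hypothetical. It only inspects the HTML, so a noindex sent in an X-Robots-Tag HTTP header would need to be checked separately.

```python
from html.parser import HTMLParser


class RobotsAudit(HTMLParser):
    """Collect robots meta directives and rel="nofollow" links from a page."""

    def __init__(self):
        super().__init__()
        self.directives: set[str] = set()
        self.nofollow_links: list[str] = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)  # tag and attribute names arrive lowercased
        if tag == "meta" and (attrs.get("name") or "").lower() in ("robots", "googlebot"):
            content = attrs.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(",") if d.strip())
        elif tag == "a" and "nofollow" in (attrs.get("rel") or "").lower().split():
            self.nofollow_links.append(attrs.get("href") or "")


html = """<head><meta name="robots" content="noindex, follow"></head>
<body><a href="https://example.org/care-guide" rel="nofollow">Care guide</a>
<a href="/rings">Rings</a></body>"""

audit = RobotsAudit()
audit.feed(html)
print("noindex" in audit.directives)  # True
print(audit.nofollow_links)  # ['https://example.org/care-guide']
```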

Step 1.8: Faceted Navigation

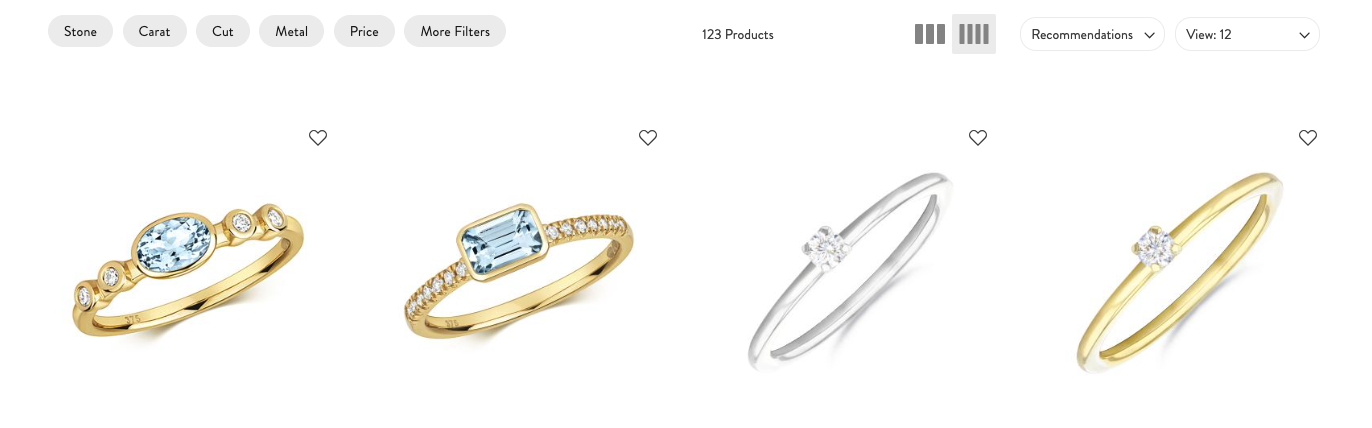

Faceted navigation is the way in which your online store allows users to filter and sort results based on what they are looking for.

For example, when a user is looking for a ring on a jewellery website, they may filter the products by stone, carat, cut, or price to help them get to the product faster.

This is great for user experience. However, you risk creating duplicate content, because each filter combination can generate a new URL whose content largely duplicates the main category page.

Why is duplicate content bad?

Duplicate content is a problem because search engines usually show only one version of pages with the same content, so duplicate pages compete with each other, split ranking signals, and waste crawl budget.

Therefore, check whether the product filter pages are blocked from search engines in the robots.txt file, carry “noindex” tags, or are canonicalised to the main category page.
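For filter pages handled with canonical tags, one quick check is to work out which category URL each filter URL should point to and compare it with the canonical the page declares. In this Python sketch, the facet parameter names (borrowed from the ring example above) are hypothetical; any other parameters, such as pagination, are kept.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical facet and sort parameters, based on the ring example above.
FACET_PARAMS = {"stone", "carat", "cut", "price", "sort"}


def category_url_for(url: str) -> str:
    """Strip facet/sort parameters so a filter URL maps back to its category page."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
            if k not in FACET_PARAMS]
    # Rebuild the URL without the facet parameters (and without any fragment).
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


print(category_url_for("https://example.com/rings?stone=diamond&cut=oval&sort=price"))
# https://example.com/rings
```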

Step 1.9: Canonical Tags

A canonical tag is a snippet of HTML code that helps search engine robots understand which is the “main” version of a page within a group of identical or very similar pages.

Used correctly, it helps the right page rank in the SERP, consolidates link equity from duplicate pages, and improves the overall crawling and indexing of your website.

Most pages on an e-commerce site carry a self-referential canonical tag, telling search engine robots that the page itself is the “main” version.

Filter pages, however, are duplicates, so instead of a self-referential canonical tag they should have a canonical tag that points to the main category page.

These canonical tags should only point to indexable URLs and each page should only have one canonical tag. These must also be checked as part of a technical SEO audit.
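These checks can be scripted. This Python sketch parses a page’s HTML with the standard html.parser module, resolves relative canonical URLs, and reports missing or multiple canonical tags and canonicals that point to non-indexable URLs. The set of indexable URLs is an assumed input, for example every URL in your crawl that returns 200 OK without a noindex tag.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> on a page."""

    def __init__(self):
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "link" and "canonical" in (attrs.get("rel") or "").lower().split():
            self.canonicals.append(attrs.get("href") or "")


def check_canonical(page_url: str, html: str, indexable_urls: set[str]) -> list[str]:
    """Return a list of canonical-tag problems for one page (empty if none)."""
    finder = CanonicalFinder()
    finder.feed(html)
    if not finder.canonicals:
        return ["no canonical tag"]
    problems = []
    if len(finder.canonicals) > 1:
        problems.append("more than one canonical tag")
    # Resolve relative hrefs such as "/rings" against the page URL.
    target = urljoin(page_url, finder.canonicals[0])
    if target != page_url and target not in indexable_urls:
        problems.append(f"canonical points to non-indexable URL {target}")
    return problems


indexable = {"https://example.com/rings"}
print(check_canonical("https://example.com/rings?stone=ruby", '<link rel="canonical" href="/rings">', indexable))  # []
```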

Step 1.10: Structured Data

Structured data, also known as schema markup, is code placed on a web page that helps search engine robots understand the page’s contextual information and can make the page eligible for rich results (enhanced snippets) in the SERP.

This can help search engines match search terms to the product and collection pages on your website, increase click-through rate, and thus increase organic traffic.

Below is a list of the most important schema types for an e-commerce website.

- Video schema

- Breadcrumb schema

- FAQs schema

- Offer schema

- Navigation and search schema

- Rating and review schema

- Product schema

Product schema is the most common type of structured data as it contains essential information about the product such as price, availability, and whether the product is on offer or not.

Breadcrumb schema is another important schema markup as it helps users and search engine bots understand how individual product pages fit into the website’s hierarchy.

As a result, it is important to check that every product page has appropriate product schema and that every schema present on the website is implemented correctly.
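Below is a minimal example of product schema, generated in Python as JSON-LD (the format Google recommends for structured data). The product details are hypothetical, and a real page would usually add more properties such as brand, description, and reviews; you can validate the output with Google’s Rich Results Test.

```python
import json


def product_jsonld(name: str, sku: str, price: float, currency: str,
                   in_stock: bool, image_url: str, page_url: str) -> str:
    """Build a minimal schema.org Product (with an Offer) as a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "image": image_url,
        "offers": {
            "@type": "Offer",
            "url": page_url,
            "price": f"{price:.2f}",
            "priceCurrency": currency,  # ISO 4217 code, e.g. "GBP"
            "availability": "https://schema.org/InStock" if in_stock
            else "https://schema.org/OutOfStock",
        },
    }
    # Escape "</" so a product name can never close the script tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


print(product_jsonld("Diamond Solitaire Ring", "RING-001", 1250, "GBP", True,
                     "https://example.com/img/ring.jpg",
                     "https://example.com/rings/diamond-solitaire"))
```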

Step 1.11: Search Engine Friendly URLs

URLs are not the most important ranking signal, but they give search engines some context about what a page is about, helping them rank it for the correct search terms, so they are worth checking.

Below are some best practices to adhere to when creating your URLs.

- Use keyword-rich URLs over dynamic variable URLs (as they have a 45% higher click-through rate)

- Use dashes instead of underscores as word separators

- Avoid extraneous characters such as &, @, etc.

- Keep URLs as short as possible
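If your platform lets you set URL handles, a small helper can apply these rules consistently. This Python sketch is illustrative: the eight-word cap is an arbitrary assumption to keep URLs short, and characters outside plain ASCII letters and digits are either transliterated or dropped.

```python
import re
import unicodedata


def slugify(text: str, max_words: int = 8) -> str:
    """Turn a product or category name into a short, hyphen-separated URL slug."""
    # Transliterate accented letters (é -> e) and drop anything non-ASCII.
    text = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    # Remove apostrophes so "Women's" becomes "womens", not "women-s".
    text = text.replace("'", "")
    # Lowercase, and treat any run of other characters (&, @, spaces, _) as a separator.
    words = re.sub(r"[^a-z0-9]+", " ", text.lower()).split()
    return "-".join(words[:max_words])


print(slugify("Women's 18ct Gold & Diamond Ring"))  # womens-18ct-gold-diamond-ring
```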

Step 1.12: Site Architecture & Internal Linking

Website architecture is the hierarchical structure of your web pages, which is mainly reflected through internal linking.

Below are the benefits of a logical website structure.

- Helps users easily find products and information

- Helps search engine bots find, index and rank all the different web pages

- Gives your website the opportunity to appear in the SERP as sitelinks

- Spreads link equity (authority) between web pages (via internal links)

Therefore, checking your e-commerce site structure is a must when doing an SEO audit. Below are five things to look at when checking it.

- Ensure there is a logical hierarchical structure where the homepage is at the top, followed by categories, subcategories, and product pages

- Ensure that the click depth is a maximum of four clicks; any deeper and both users and search engines may struggle to find those pages

- Use your competitors’ site architecture as a reference point to understand whether yours is good

- Ensure that your internal links are working correctly

- Ensure that important pages are not orphaned by adding internal links to them
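Click depth and orphan pages can be measured with a breadth-first search over your internal links, starting from the homepage. In this Python sketch, the link graph is a hypothetical input (for example built from a crawler’s internal link export), the full page list would typically come from your XML sitemap, and the four-click limit is the one suggested above.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Breadth-first search from the homepage: fewest clicks needed to reach each page."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit in BFS is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


links = {
    "/": ["/rings", "/necklaces"],
    "/rings": ["/rings/engagement"],
    "/rings/engagement": ["/rings/engagement/diamond-solitaire"],
    "/necklaces": [],
}
all_pages = set(links) | {"/rings/engagement/diamond-solitaire", "/gift-guide"}

depths = click_depths(links)
too_deep = [page for page, d in depths.items() if d > 4]
orphans = sorted(all_pages - set(depths))  # pages no internal link path reaches
print(depths["/rings/engagement/diamond-solitaire"])  # 3
print(orphans)  # ['/gift-guide']
```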

Step 1.13: Page Speed

A slow site means that search engines can crawl fewer pages within the crawl budget allocated to it, which could negatively affect your pages’ ability to get indexed and thus hinder your SEO efforts.

Page speed is also important for user experience. Pages that take longer to load or are harder to navigate are associated with higher bounce rates (the share of visitors who leave after viewing a single page).

Slow pages also hurt conversions: a widely cited figure suggests that a one-second delay in page response can reduce conversions by 7%. Therefore, it is important to make sure your pages load quickly.

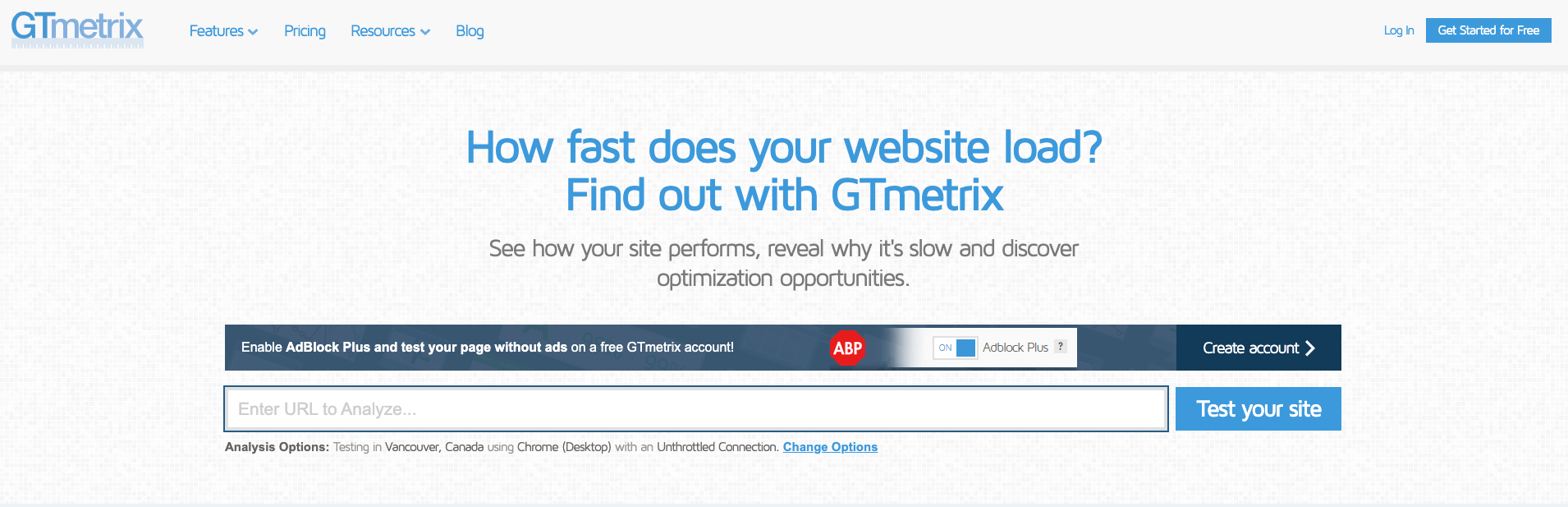

It is therefore important to check how quickly your pages load, especially the homepage and a representative sample of category and product pages. To test your page speed, go to GTmetrix and enter your page URL, as seen below.

Your goal is to get your pages to a GTmetrix Grade A.

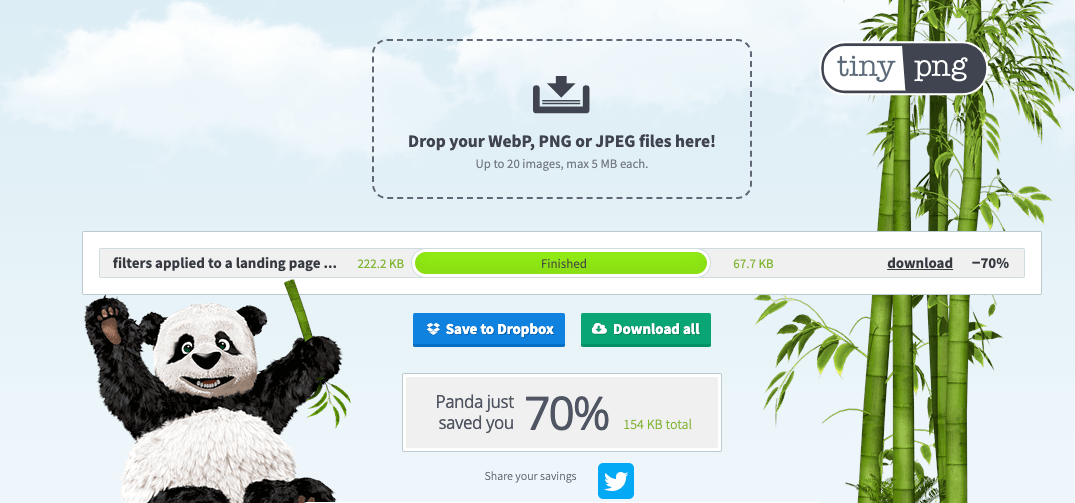

If your pages score below grade A, you will need to make the necessary adjustments. Often, the biggest improvement comes from compressing your images.

A useful tool to compress your images before uploading them is https://tinypng.com/.

This service lets you simply drop your image files into the box provided, and it compresses them for you.

You can also use Google’s free PageSpeed Insights tool, which generates a report with key insights, such as delays caused by heavy use of JavaScript at load time, delays on the server side, and images that are larger than they need to be (for example, image files over 100KB), all of which slow your pages down.
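To find images worth compressing before you upload them, you could scan your image folder for files above a size budget. This Python sketch assumes a 100KB budget per image, taken from the figure mentioned above rather than from any official threshold.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".webp", ".gif"}
SIZE_BUDGET_BYTES = 100 * 1024  # assumed budget: 100KB per image


def oversized_images(folder: str, budget: int = SIZE_BUDGET_BYTES) -> list[tuple[str, int]]:
    """Return (path, size in bytes) for images above the budget, largest first."""
    found = []
    # rglob("*") walks the folder and all of its subfolders.
    for path in Path(folder).rglob("*"):
        if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES:
            size = path.stat().st_size
            if size > budget:
                found.append((str(path), size))
    return sorted(found, key=lambda item: item[1], reverse=True)
```

Call it with the path to your own image folder, then compress the files it lists with a tool such as TinyPNG.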

Once your page speed is where it should be, you may start to see an increase in indexed pages and a reduction in bounce rate, which in turn can help your rankings in the SERP.

Now that you have evaluated the strengths and weaknesses of your website from a technical standpoint, you must now start analysing the content and appearance of your web pages through on-page SEO.

Step 2: On-Page SEO

On-page SEO is the process of optimising the content and appearance of the web pages to improve rankings for specific keywords, user experience, and conversions – this can be from the top-level category pages to the subcategories and product pages.

Step 2.1: Title Tags

A title tag is an HTML element that gives a page its title. Title tags are very important for on-page SEO because they are one of the main signals search engines use to understand what a page is about, which helps ensure that the page shows up in the SERP for relevant search terms.

Title tags are also important because they are the first thing a user sees in the SERP, which can influence whether they click on your page. Therefore, title tags can help improve your rankings and user experience.

When you analyse the title tags of your web pages, check that they meet the six requirements below so that they are optimised for users and search engines.

- Ensure your title tags are 40-60 characters

- Ensure that your title tags clearly describe the content of the page.

- Ensure your title tags are unique on the SERP and on your site

- Use keywords in the title tag without keyword stuffing

- Use patterns and punctuation in your titles to guide the reader

- Ensure your title tags are clear, concise, and honest
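Given the titles from a crawl export (each URL mapped to its title), the Python sketch below flags missing titles, titles outside the 40-60 character range, and titles duplicated across your site. The example URLs and titles are hypothetical, and the script cannot tell whether a title is unique in the SERP or clearly describes its page; those checks still need a human.

```python
from collections import Counter

MIN_LEN, MAX_LEN = 40, 60  # character range recommended above


def audit_titles(titles: dict[str, str]) -> dict[str, list[str]]:
    """Map each URL with a title problem to a list of issues."""
    # Count normalised titles so duplicates across the site can be spotted.
    counts = Counter(t.strip().lower() for t in titles.values() if t.strip())
    issues = {}
    for url, raw in titles.items():
        title = raw.strip()
        problems = []
        if not title:
            problems.append("missing")
        else:
            if not MIN_LEN <= len(title) <= MAX_LEN:
                problems.append(f"length {len(title)}")
            if counts[title.lower()] > 1:
                problems.append("duplicate")
        if problems:
            issues[url] = problems
    return issues


titles = {
    "/rings": "Handmade Engagement Rings in Gold & Platinum | Example",
    "/necklaces": "Necklaces",
    "/sale": "Necklaces",
    "/about": "",
}
for url, problems in audit_titles(titles).items():
    print(url, problems)
```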

Step 2.2: Meta Description Tags

Meta description tags are not a ranking factor, but they are useful for both users and search engine crawlers because they provide a short summary of the page content.

A well-written meta description may be shown as part of the search snippet in the SERP.

It acts as a pitch to convince users that the page matches what they are looking for, which can increase clicks.

Additionally, a good meta description with the right keywords could help crawlers collect information about the page efficiently and understand how relevant it is.

Check that your meta descriptions are descriptive, unique to each page, and include secondary keywords where they fit naturally.

Step 2.3: H1 Headings

An H1 tag is an HTML heading used to mark up a web page’s main subject. A title tag appears in the SERP and in the browser tab, whilst an H1 tag is usually not shown in the SERP but is what users see on the page.

The H1 heading is important because it helps search engines better understand what the page is about. It also improves user experience (UX) as visitors can quickly determine what the page is about without having to spend too much time.

Ensure that you use just one H1 tag per page. This provides your pages with a logical structure, which will allow website crawlers to better understand your pages.

Also, ensure that you include the main keyword that you are trying to rank for as Google can use your H1 to assess whether the content on the page is a good match for a search query.
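A rough automated check for the two H1 rules above is sketched below in Python. The main keyword for each page is an assumed input from your keyword research, and matching is a simple case-insensitive substring test.

```python
from html.parser import HTMLParser


class H1Collector(HTMLParser):
    """Collect the text of every <h1> element on a page."""

    def __init__(self):
        super().__init__()
        self.h1s: list[str] = []
        self._in_h1 = False

    def handle_starttag(self, tag, attrs):
        if tag == "h1":
            self._in_h1 = True
            self.h1s.append("")

    def handle_endtag(self, tag):
        if tag == "h1":
            self._in_h1 = False

    def handle_data(self, data):
        # Text inside nested tags (e.g. <em>) is still part of the H1.
        if self._in_h1:
            self.h1s[-1] += data


def check_h1(html: str, keyword: str) -> list[str]:
    """Return H1 problems for one page (empty list if none)."""
    collector = H1Collector()
    collector.feed(html)
    headings = [" ".join(h.split()) for h in collector.h1s]
    if not headings:
        return ["no H1"]
    problems = []
    if len(headings) > 1:
        problems.append(f"{len(headings)} H1 tags")
    if keyword.lower() not in headings[0].lower():
        problems.append("main keyword missing from H1")
    return problems


print(check_h1("<h1>Diamond <em>Engagement</em> Rings</h1>", "engagement rings"))  # []
```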

Step 2.4: Duplicate Content and Cannibalisation

Duplicate content is when you have pages on your site that have the same or very similar body content, title, or meta descriptions.

As we mentioned earlier in this article, search engines like Google do not consider duplicate content helpful, and it can lead to indexing issues.

Additionally, Google usually chooses one page from a site to rank for a given keyword, so if several pages have duplicate content or are optimised for the same keyword, they compete with each other and risk none of them ranking well. This is known as keyword cannibalisation.

Therefore, you should check whether your website is free of duplicate content.
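One common way to flag near-duplicate pages is to compare their body text as sets of overlapping three-word sequences (“shingles”) using Jaccard similarity; this is a generic technique, not a specific tool’s method. The Python sketch below also groups pages by the keyword they target to surface possible cannibalisation. The 0.8 threshold and the sample pages are hypothetical, and comparing every pair of pages is only practical for a few thousand pages.

```python
import re
from collections import defaultdict
from itertools import combinations


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Split text into overlapping word sequences of the given size."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {tuple(words[i:i + size]) for i in range(max(len(words) - size + 1, 1))}


def near_duplicates(pages: dict[str, str], threshold: float = 0.8) -> list[tuple[str, str, float]]:
    """Return URL pairs whose body text has a Jaccard similarity >= threshold."""
    sets = {url: shingles(text) for url, text in pages.items()}
    pairs = []
    for a, b in combinations(sets, 2):
        union = sets[a] | sets[b]
        score = len(sets[a] & sets[b]) / len(union) if union else 1.0
        if score >= threshold:
            pairs.append((a, b, round(score, 2)))
    return pairs


def cannibalisation(target_keywords: dict[str, str]) -> dict[str, list[str]]:
    """Return keywords that more than one URL is optimised for."""
    by_keyword = defaultdict(list)
    for url, keyword in target_keywords.items():
        by_keyword[keyword.strip().lower()].append(url)
    return {kw: urls for kw, urls in by_keyword.items() if len(urls) > 1}


pages = {
    "/rings?sort=price": "Browse our handmade engagement rings in gold and platinum",
    "/rings": "Browse our handmade engagement rings in gold and platinum",
    "/necklaces": "Discover silver necklaces and pendants for every occasion",
}
print(near_duplicates(pages))  # [('/rings?sort=price', '/rings', 1.0)]
```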

Step 2.5: Pages Review (Content quality)

Reviewing the pages on a website such as the homepage, landing pages, blog posts, and product pages is important because it highlights areas in need of optimisation.

The homepage usually receives the highest number of clicks and unique visits from the SERP, so it should be optimised with content, internal links, and calls to action that inform users and guide their journey through the website, offering the best possible user experience.

Landing pages are important because they are usually the first page users land on, so they should be optimised for SEO, target relevant keywords, and have appropriate filters to make navigation easier.

Reviewing the blog post pages allows you to identify pages with thin content, content gaps, and potential cannibalisation, all of which can be fixed.

The product pages can also be optimised using relevant keywords in the description, which will help improve the page’s visibility.

Step 3: Off-Page SEO

Off-Page SEO covers all the actions that can bring a backlink to your website from another website with high authority.

If done properly, off-page SEO signals Expertise, Authoritativeness, and Trustworthiness (E-A-T) to search engines, which in turn can lead to better rankings within the SERP.

Additionally, it can raise third-party authority metrics such as Moz’s Domain Authority (DA) and increase customers’ trust in your business.

So, without further ado, let’s look at backlinks and how you can build them for your website.

Step 3.1: Backlinks and Link Building

In simple terms, link building is about getting links to your pages from other high authority websites.

Pages with many backlinks from high-authority websites are seen as more authoritative by Google, which helps them rank higher within the SERP.

You can use SEMrush’s Backlink Audit tool to check the backlinks pointing to your website and make sure none of them are toxic, as toxic backlinks can negatively affect your website.

If you find that your site has many toxic backlinks, first try to have them removed by contacting the linking sites, then ask Google to ignore the rest using Google’s disavow tool.
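If you do decide to disavow links, Google’s disavow tool expects a plain text file with one URL or one domain:example.com entry per line, where lines starting with # are comments. The Python sketch below builds such a file from the results of a backlink audit; the domains and URLs are hypothetical, and Google advises using the tool only when you have many spammy or artificial links pointing to your site.

```python
from urllib.parse import urlsplit


def build_disavow_file(toxic_urls: list[str], toxic_domains: set[str]) -> str:
    """Return the contents of a disavow file for Google's disavow links tool."""
    domains = sorted(d.strip().lower() for d in toxic_domains)
    lines = ["# Links disavowed after backlink audit"]
    lines += [f"domain:{d}" for d in domains]
    for url in sorted(set(toxic_urls)):
        # Skip URLs whose host is already disavowed as a whole domain.
        if (urlsplit(url).hostname or "") not in domains:
            lines.append(url)
    return "\n".join(lines) + "\n"


print(build_disavow_file(["http://spam.example/page1", "http://bad.example/a"], {"bad.example"}))
```

Save the output as a UTF-8 .txt file before uploading it to the tool.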

You can also create backlink opportunities by identifying and building relationships with other websites that are relevant to your niche.

Take note of any websites or pages referring to your business and make sure they have a link pointing back to you.

Step 4: Competitor Analysis

Competitor analysis is when you identify your competitors and analyse their strengths, weaknesses, and most importantly their SEO strategies.

Competitor analysis is important because the findings from your research will allow you to improve your own SEO strategy and thus dominate your competitors.

For example, competitor analysis may reveal new keywords, content ideas, and link building opportunities that were previously not at the forefront of your mind, which can improve your site’s visibility in the SERP.

Step 4.1: Identify Your Competitors

Firstly, you must identify your competitors, which are websites that have a high ranking for the target keywords in the SERP.

A good place to start is by using SEMrush’s Organic Research Tool, which will allow you to find your main organic competitors.

Alternatively, you can simply google your target keywords to see the websites that show up on the first page of the SERP.

Always bear in mind that not every website ranking for your keywords is a true competitor; your real competitors are the sites that sell similar products or offer similar services.

Step 4.2: Identify Keyword Gaps

Once you have identified your competitors, you must identify keywords that you do not rank for, but your competitors do.

You can use SEMrush’s Keyword Gap tool to find these keywords.

It is very important that you analyse every keyword individually as not all the keywords will be relevant to your website.
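The comparison behind a keyword gap analysis can also be reproduced offline from exported ranking data. In this Python sketch, each site’s data is assumed to be a mapping of keyword to ranking position, the competitor names are hypothetical, and a keyword counts as a gap when a competitor ranks in the top 10 and you do not. The function only shortlists keywords, so each one still needs the manual relevance review described above.

```python
def keyword_gaps(mine: dict[str, int], competitors: dict[str, dict[str, int]],
                 top: int = 10) -> dict[str, list[str]]:
    """Keywords where a competitor ranks in the top N but you do not.

    Each dict maps keyword -> ranking position; returns keyword -> competitors,
    with keywords shared by the most competitors first.
    """
    gaps: dict[str, list[str]] = {}
    for competitor, rankings in competitors.items():
        for keyword, position in rankings.items():
            # A keyword you do not rank for at all counts as outside the top N.
            if position <= top and mine.get(keyword, top + 1) > top:
                gaps.setdefault(keyword, []).append(competitor)
    return dict(sorted(gaps.items(), key=lambda item: -len(item[1])))


mine = {"engagement rings": 4, "gold necklaces": 25}
competitors = {
    "a.example": {"engagement rings": 2, "gold necklaces": 6, "ring sizer": 3},
    "b.example": {"gold necklaces": 9, "wedding bands": 40},
}
print(keyword_gaps(mine, competitors))
# {'gold necklaces': ['a.example', 'b.example'], 'ring sizer': ['a.example']}
```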

Step 4.3: Identify Strengths & Weaknesses

Identifying the strengths and weaknesses of your competitors is essential to improving the visibility of your website in the SERP.

You must carefully analyse the competitors’ website structure, content layout, page layout, user experience, services, and calls to action.

This allows you to spot their weaknesses and do better where they fall short, replicate their strengths, and even come up with innovative ways of doing things on your own website.

Step 5: Traffic Analysis

Monitoring your website’s traffic should be a continuous process on any web project’s road to success.

SEMrush and SE Ranking can be used to monitor traffic, but Google Search Console (GSC) and Google Analytics are the most powerful because they use your site’s own data and show exactly where the traffic is coming from.

Google Search Console mainly provides information about what happens in Google’s SERP, while Google Analytics provides information about internal navigation and traffic coming from the SERP and other sources.

This way you can observe how your website is doing and whether your SEO efforts are working or not, which will allow you to adjust your SEO strategy in accordance with the data you receive.

Step 5.1: Google Analytics

Any drops, spikes, or patterns in website traffic can be observed in Google Analytics, which puts you on the right path to determining the cause and thus the appropriate solution.

For instance, if traffic declines, you can check whether the decline relates to pages that have dropped in position in the SERP or pages that have been de-indexed in GSC, which you might not otherwise have noticed.
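To catch drops like this early, you can compare each day’s organic sessions (for example exported from Google Analytics) with the average of the previous days. The 7-day window and 30% threshold in this Python sketch are arbitrary assumptions, and the sample data is made up for illustration.

```python
from statistics import mean


def flag_drops(daily: list[tuple[str, int]], window: int = 7, drop: float = 0.3) -> list[str]:
    """Return dates whose sessions fall more than `drop` (30% by default) below the trailing average."""
    flagged = []
    for i in range(window, len(daily)):
        # Average of the `window` days immediately before day i.
        baseline = mean(count for _, count in daily[i - window:i])
        date, count = daily[i]
        if baseline and count < baseline * (1 - drop):
            flagged.append(date)
    return flagged


sessions = [(f"2024-05-{day:02d}", 100) for day in range(1, 8)]
sessions += [("2024-05-08", 60), ("2024-05-09", 95)]
print(flag_drops(sessions))  # ['2024-05-08']
```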

Through Google Analytics, you can check exactly where your traffic is coming from such as organic search, organic social, referral (backlinks), and direct – this is crucial to see whether your SEO efforts are improving your organic search traffic.

Google Analytics can also allow you to check landing pages, specific goals, and events, which can provide you with useful data.

Step 5.2: Google Search Console

Google Search Console can also be used to assess changes in traffic. It is very useful because it shows the number of impressions, clicks, and average position over a chosen period.

GSC also allows you to check the status of pages, such as whether they have been indexed or not.

Additionally, GSC offers filterable reports on how your pages perform in the SERP, covering your top traffic pages, sitemap status, page experience, and many other useful things.

This will just add to the data you already have allowing you to make a more informed decision on what to do next.

E-commerce SEO audit checklist

Technical SEO audit

- Check how crawlable and indexable your website is

- Check your robots.txt file

- Check the response codes your site is returning

- Check for any redirect chains

- Ensure your site is optimised for International SEO (if applicable to your business goals)

- Check the XML sitemap

- Ensure “noindex” and “nofollow” tags are present on the appropriate pages

- Check faceted navigation (ensure filter pages are kept out of the index with robots.txt rules, noindex tags, or canonical tags)

- Ensure the correct canonical tags are present for each page on your site

- Ensure appropriate structured data is used and implemented correctly

- Check whether your URLs are both user and search engine friendly

- Review the website architecture and internal linking

- Ensure page speed is optimised

On-page SEO audit

- Ensure your title tags are optimised for both users and search engines

- Review your meta description tags

- Check the H1 headings for your web pages

- Check for duplicate content and cannibalisation

- Review your pages for content quality

Off-page SEO audit

- Ensure there are no toxic backlinks pointing to your website

Competitor analysis

- Identify your competitors

- Identify keyword gaps between your site and the competitors

- Identify competitors’ strengths and weaknesses

Traffic analysis

- Look at your website traffic using Google Analytics 4 (GA4)

- Review your website traffic using Google Search Console (GSC)

Final Thoughts

In conclusion, an SEO audit is the foundation on which an effective SEO strategy is built, which is why it is so important.

Once you have completed an SEO audit, list the issues you have identified, including the findings from the competitor and traffic analyses, and prioritise them using a graded system that rates how critical each issue is and how difficult it is to solve.
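One simple way to implement such a graded system is to score each issue for impact and effort and fix the best impact-to-effort ratios first. The 1-5 scales and sample issues in this Python sketch are hypothetical; adapt them to your own grading.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    name: str
    impact: int  # 1 (minor) to 5 (critical)
    effort: int  # 1 (quick fix) to 5 (major project)


def prioritise(issues: list[Issue]) -> list[Issue]:
    """Order issues by impact-to-effort ratio, breaking ties by impact."""
    return sorted(issues, key=lambda i: (-i.impact / i.effort, -i.impact))


backlog = [
    Issue("Slow product pages", impact=5, effort=4),
    Issue("Redirect chains", impact=3, effort=1),
    Issue("Missing product schema", impact=4, effort=2),
    Issue("Duplicate title tags", impact=2, effort=1),
]
for rank, issue in enumerate(prioritise(backlog), start=1):
    print(rank, issue.name)
```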

You will also need to have a realistic timescale to solve these problems. This is the best way to construct a road map to an effective SEO strategy that will give your e-commerce website the traffic that you have been looking for.

Following this guide will equip you with the knowledge to implement SEO best practices on your website, but don’t forget that your marketing plans and goals should also be carefully considered.

This can be a daunting and challenging task, which is why we provide e-commerce SEO audit services for those of you who want to strike the right balance between SEO best practices and your marketing plans, so that your brand stands out in the SERP in the most efficient way.

All in all, this blog post has given a concise overview of the basics of an SEO audit making this guide the perfect starting point for you to construct an effective SEO strategy in the future.
